Preface and Acknowledgements

Peter W. Wood

President

National Association of Scholars

This report uses statistical analyses to provide further evidence that implicit bias theory, which radical activists have used to justify discriminatory and repressive “diversity, equity, and inclusion” (DEI) policies at all levels of government and in the private sector, has no scientific foundation. This report adds to and substantiates many previously published criticisms.

The National Association of Scholars (NAS) has been publicizing the dangers of the irreproducibility crisis for years, and now the crisis has played a major role in the imposition of DEI policies on the nation and, most dangerously, in the attempt to impose them on the realm of law and justice. Why does the irreproducibility crisis matter? What, practically, has it affected? Our Exhibit A is now implicit bias theory and its effects.

Before I explain implicit bias theory and its effects, I should explain the nature and the extent of the irreproducibility crisis. The crisis has had an ever more deleterious effect on a vast number of the sciences and social sciences, from epidemiology to education research. What went wrong in social psychology and implicit bias theory has gone wrong in a great many other disciplines.

The irreproducibility crisis is the product of improper research techniques, a lack of accountability, disciplinary and political groupthink, and a scientific culture biased toward producing positive results. Other factors include inadequate or compromised peer review, secrecy, conflicts of interest, ideological commitments, and outright dishonesty.

Science has always had a layer of untrustworthy results published in respectable places, as well as “experts” who were eventually shown to have been sloppy, mistaken, or untruthful in their reported findings. Irreproducibility itself is nothing new. Science advances, in part, by learning how to discard false hypotheses, which sometimes means dismissing reported data that does not stand the test of independent reproduction.

But the irreproducibility crisis is something new. The magnitude of false (or simply irreproducible) results reported as authoritative in journals of record appears to have dramatically increased. “Appears” is a word of caution, since we do not know with any precision how much unreliable reporting occurred in the sciences in previous eras. Today, given the vast scale of modern science, even if the percentage of unreliable reports has remained fairly constant over the decades, the sheer number of irreproducible studies has grown enormously. Moreover, the contemporary practice of science, which depends on a regular flow of large governmental expenditures, means that the public is, in effect, buying a product rife with defects. On top of this, the regulatory state frequently builds both the justification and the substance of its regulations upon the foundation of unproven, unreliable, and, sometimes, false scientific claims.

In short, many supposedly scientific results cannot be reproduced reliably in subsequent investigations and offer no trustworthy insight into the way the world works. A majority of modern research findings in many disciplines may well be wrong.

That was how the National Association of Scholars summarized matters in our report The Irreproducibility Crisis of Modern Science: Causes, Consequences, and the Road to Reform (2018).1 Since then we have continued our work toward reproducibility reform through several different avenues. In February 2020, we cosponsored with the Independent Institute an interdisciplinary conference, Fixing Science: Practical Solutions for the Irreproducibility Crisis, to publicize the crisis, exchange information across disciplinary lines, and canvass (as the conference’s title suggests) practical solutions.2 We have also submitted a series of public comments in support of the Environmental Protection Agency’s rule Strengthening Transparency in Pivotal Science Underlying Significant Regulatory Actions and Influential Scientific Information.3 Beyond this, we have publicized different aspects of the irreproducibility crisis by way of podcasts and short articles.4

And we have undertaken our Shifting Sands project. In May 2021, we published Keeping Count of Government Science: P-Value Plotting, P-Hacking, and PM2.5 Regulation. In July 2022, we published Flimsy Food Findings: Food Frequency Questionnaires, False Positives, and Fallacious Procedures in Nutritional Epidemiology. In July 2023, we published The Confounded Errors of Public Health Policy Response to the COVID-19 Pandemic.5 This report, Zombie Psychology, Implicit Bias Theory, and the Implicit Association Test, is the fourth of the four reports in Shifting Sands, each of which addresses the role of the irreproducibility crisis in a different area of government policy. In these reports we address a central question that arose after we published The Irreproducibility Crisis:

You’ve shown that a great deal of science hasn’t been reproduced properly and may well be irreproducible. How much government regulation is actually built on irreproducible science? What has been the actual effect on government policy of irreproducible science? How much money has been wasted to comply with regulations that were founded on science that turned out to be junk?

This is the sixty-four-trillion-dollar question. It is not easy to answer. Because the irreproducibility crisis has so many components, each of which could affect the research that is used to inform government policy, we are faced with many possible sources of misdirection.

The authors of Shifting Sands list these, just to begin with:

- malleable research plans;

- legally inaccessible datasets;

- opaque methodology and algorithms;

- undocumented data cleansing;

- inadequate or nonexistent data archiving;

- flawed statistical methods, including p-hacking;

- publication bias that hides negative results; and

- political or disciplinary groupthink.

Each of these could have far-reaching effects on government policy—and, for each of these, the critique, if well-argued, would most likely prove that a given piece of research had not been reproduced properly, not that it had actually failed to reproduce. (Studies can be made to “reproduce,” even if they don’t really.) To answer the question thoroughly, one would need to reproduce, multiple times, to modern reproducibility standards, every piece of research that informs governmental policy.

This should be done. But it is not within our means to do so.

What the authors of Shifting Sands did instead was reframe the question more narrowly. Governmental regulation (the focus of the first Shifting Sands reports) is meant to clear a high barrier of proof. Regulations should be based on a very large body of scientific research, the combined evidence of which provides sufficient certainty to justify reducing Americans’ liberty with a governmental regulation. What is at issue is not any particular piece of scientific research but, rather, whether the entire body of research provides so great a degree of certainty as to justify regulation. If the government issues a regulation based on a body of research that has been affected by the irreproducibility crisis so as to create the false impression of collective certainty (or extremely high probability), then, yes, the irreproducibility crisis has affected government policy by providing a spurious level of certainty to a body of research that justifies a governmental regulation.

Those who justify regulations based on flimsy or inadequate research often cite a version of what is known as the “precautionary principle.” This means that, rather than basing a regulation on science that has withstood rigorous tests of reproducibility, they base the regulation on the possibility that a scientific claim is accurate. They reason that it is too dangerous to wait for the actual validation of a hypothesis and that a lower standard of reliability is necessary when dealing with matters that might involve severely adverse outcomes if no action is taken.

This report does not deal with the precautionary principle, since the principle summons a conclusiveness that lies beyond the realm of actual science. We note, however, that an invocation of the precautionary principle is not only nonscientific but is also an inducement to accept meretricious scientific practice, and even fraud.

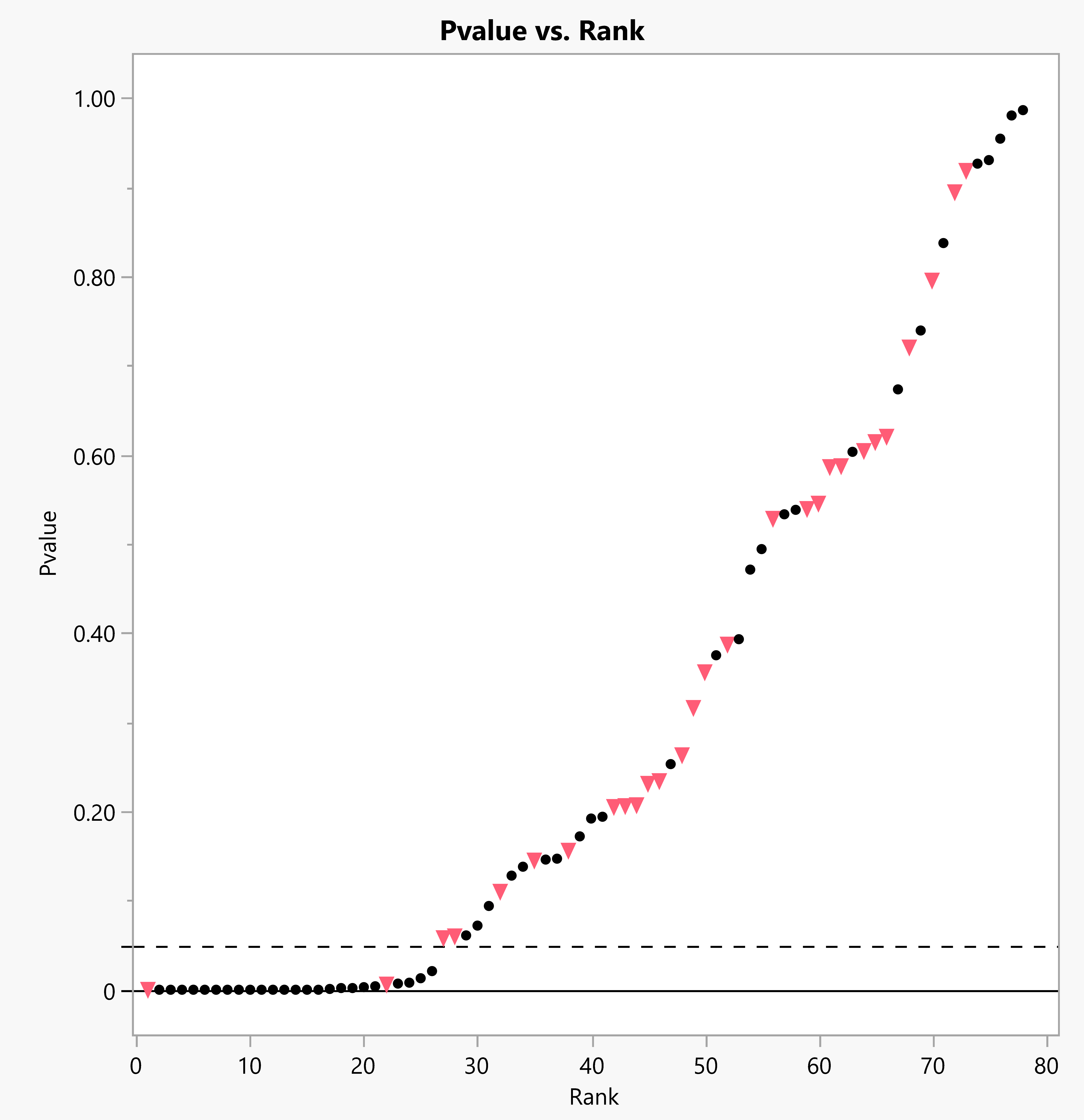

The authors of Shifting Sands addressed the more narrowly framed question posed above. They took a straightforward statistical approach, Multiple Testing and Multiple Modeling (MTMM) analysis, and applied it to a body of meta-analyses used to justify government regulation. MTMM analysis provides a simple way to assess whether any body of research has been affected by publication bias, p-hacking, and/or HARKing (Hypothesizing After the Results are Known), all central components of the irreproducibility crisis.
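Why multiple testing matters can be shown with a short calculation. The sketch below is illustrative only and is not the Shifting Sands authors' code: it assumes k independent tests in which every null hypothesis is true, each judged at the conventional p<0.05 threshold, and the function names are hypothetical. Under those assumptions the chance that at least one test comes out "significant" by luck alone is 1 - (1 - 0.05)^k.

```python
# Minimal sketch of how multiple testing inflates false positives.
# Assumption: k independent tests, all null hypotheses true, alpha = 0.05.


def family_wise_error_rate(k: int, alpha: float = 0.05) -> float:
    """Probability that at least one of k independent true-null tests has p < alpha."""
    return 1.0 - (1.0 - alpha) ** k


def expected_false_positives(k: int, alpha: float = 0.05) -> float:
    """Expected count of p < alpha results when all k null hypotheses are true."""
    return k * alpha


if __name__ == "__main__":
    for k in (1, 10, 20, 100):
        print(
            f"k={k:>3}: P(at least one false positive) = {family_wise_error_rate(k):.3f}, "
            f"expected false positives = {expected_false_positives(k):.2f}"
        )
```

With 20 tests, the chance of at least one spurious "significant" result is about 64%, and one such result is expected on average; with 100 tests the chance exceeds 99%. Real analyses rarely have fully independent tests, so these figures are a rough guide rather than an exact rate.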

In this fourth report, the authors applied the MTMM method to assess the validity of the Implicit Association Test (IAT), which is used to measure “implicit bias.” Two technical studies jointly provide further evidence that there is no significant relationship between IAT measurements and real-world behavior, whether for sex or for race. The second study also shows that advocates of implicit bias theory ignore confounders (unexamined variables that affect the analyzed variables and that, when accounted for, alter their putative relationship), which explain real-world behavior far better than implicit bias does. The two technical studies together provide strong evidence that any government or private policy that uses the IAT, or that depends on implicit bias theory, is using an instrument and a theory that have no relationship with the way people actually behave in the real world.

Zombie Psychology broadens our critique from federal agencies—the Environmental Protection Agency (EPA), the Food and Drug Administration (FDA), and the Centers for Disease Control and Prevention (CDC)—to all the levels of government, and private enterprises, that draw upon implicit bias theory and the IAT. In my previous introductions, I have written of the economic consequences of the irreproducibility crisis—of the costs, rising to the hundreds of billions annually, of scientifically unfounded federal regulations issued by the EPA and the FDA. I also have written about how activists within the regulatory complex piggyback upon politicized groupthink and false-positive results to create entire scientific subdisciplines and regulatory empires. Further, I have written, particularly with reference to COVID-19 health policy and the CDC, of the deep connection between the irreproducibility crisis and the radical-activist state by means of intervention degrees of freedom—the freedom of radical activists in federal bureaucracies to make policy, unrestrained by law, prudence, consideration of collateral damage, offsetting priorities, our elected representatives, or public opinion.

In Zombie Psychology, the Shifting Sands authors make clear that radical activists do not solely weaponize the irreproducibility crisis via federal regulatory agencies. They also work through state law, city regulation, and private sector policy. They seek to subordinate the operation of our courts to cooked “statistical associations”—and the extension of the irreproducibility crisis to our judicial system bids to be a worse threat to our liberty than the subordination of federal regulatory agencies. The irreproducibility crisis of modern science intermingles with every aspect of the radical campaign to revolutionize our republic.

Zombie Psychology, like its predecessors, suggests a series of policy reforms to address this aspect of the irreproducibility crisis. All of these are well-advised. But I want to pause for a moment, in this last of my Shifting Sands introductions, to consider some of the broader implications of these reports, as well as their connection with the NAS’s mission.

The Shifting Sands reports focus upon how the irreproducibility crisis has distorted public policy and upon public policy solutions to restore good government and protect individual liberty. The NAS’s ideal of virtuous citizenship motivates our broader concern with public policy. More specifically, we want to protect the republic at large from the grave errors of the academy. We believe ourselves morally obliged to alert the public to the role that academic scientists have played in entangling government policy with the irreproducibility crisis.

The recommendations in Shifting Sands have centered on ways to reform the machinery of government to end the politicized weaponization of the irreproducibility crisis. I am keenly aware that another strand of science policy reform centers instead on removing federal money entirely from the conduct of science, as the only effective way to bring about scientific reform, and I am aware not least because other NAS writings champion this strategy.6 The NAS is not committed exclusively to either strategy, and neither am I. What I do believe is that American science policy needs drastic reform, and I am delighted that the NAS can present to policymakers and the public more than one option for effecting that reform.

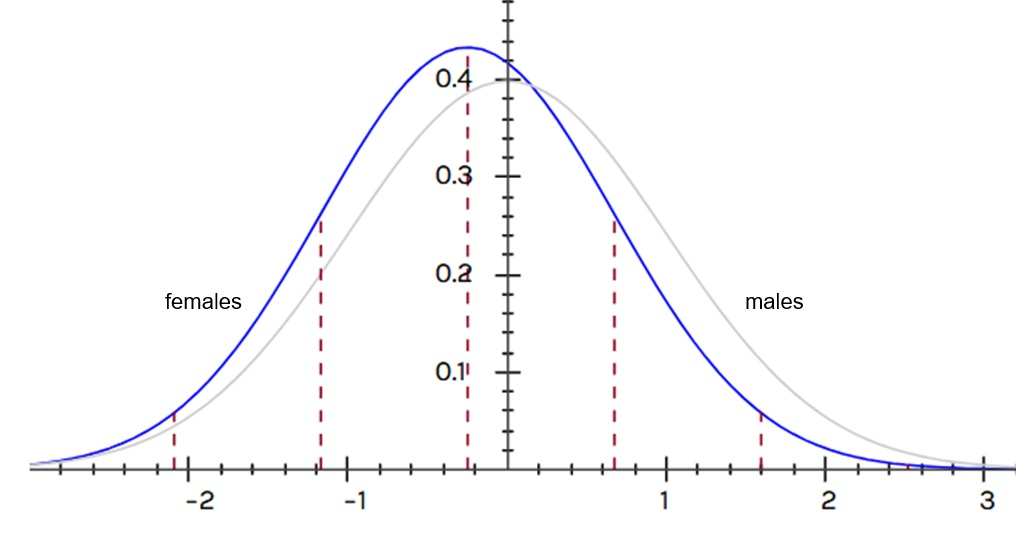

We also published the Shifting Sands reports to persuade the public not to defer too much to scientific authority. It isn’t crazy for laymen to think that experts know their own business and to think that government policymakers ought to listen to experts. But politicized and self-interested scientists have badly abused their so-called expertise for generations, to the detriment of public welfare and public liberty. The public needs the self-confidence to scrutinize so-called “expert judgment.” Throughout, the Shifting Sands reports provide a tool that any reader can use: a series of simple p-value plots, in which p-values falling along a 45-degree line show that the so-called expert science is bunkum. I am proud that Shifting Sands provides solid science that can be understood and used by every American citizen to hold the cabal of experts to account. I hope this will be a model for all science policy reports, which should use representations of scientific research that are intuitive and easy to grasp and that serve the cause of American liberty and self-government.

The NAS, ultimately, seeks to reform the academy’s practice of science for its own sake. It isn’t good for scientists to stop searching for the truth and instead to work to change policy and receive grant money. It is a profound corruption. We work to reform the practices of scientists because we want them to return to their better angels—to seek truth, no matter where it leads, rather than to impose policies on their fellow citizens via the machinery of government. The NAS wishes to improve scientific practices as part of its larger goal to depoliticize the academy—which we work toward not least because it is bad for the souls of professors, and the soul of the academy, to seek power instead of truth.

And we do not do so by losing faith in science. The authors of Shifting Sands join the distinguished cadre of “meta-researchers,” and scientists working within their separate disciplines, who work to ameliorate the irreproducibility crisis by reforming the practice of science. Neither the NAS nor I think that science is the only means by which to seek and apprehend the truth. But science does provide a unique means of apprehending the truth, and the NAS and I are at one in our delight that science’s truth-seeking resources are at work to correct the pitfalls into which too many scientists have fallen. The way out of the shifting sands of modern science is not to reject science but to set it on a proper foundation. Shifting Sands has contributed to the great campaign of this generation of scientists, and I am proud that the NAS has been able to support this work.

*

Zombie Psychology puts into layman’s language the results of several technical studies by members of the Shifting Sands team of researchers, S. Stanley Young and Warren Kindzierski. Some of these studies have been accepted by peer-reviewed journals; others have been submitted and are under review. As part of the NAS’s own institutional commitment to reproducibility, Young and Kindzierski pre-registered the methods of their technical studies. And, of course, the NAS’s support for these researchers explicitly guaranteed their scholarly autonomy and the expectation that these scholars would publish freely, according to the demands of data, scientific rigor, and conscience.

Zombie Psychology is the fourth of four scheduled reports, each critiquing different aspects of the scientific foundations of government policy. The NAS intends these four reports, collectively, to provide a substantive, wide-ranging answer to the question, “What has been the actual effect on government policy of irreproducible science?”

I am deeply grateful for the support of many individuals who made Shifting Sands possible. The Arthur N. Rupe Foundation provided the funding for Shifting Sands—and, within the Rupe Foundation, Mark Henrie’s support and goodwill got this project off the ground and kept it flying. David Acevedo copyedited Zombie Psychology with exemplary diligence and skill. David Randall, the NAS’s director of research, provided staff coordination for Shifting Sands—and, of course, Stanley Young has served as director of the Shifting Sands Project. Reports such as these rely on a multitude of individual, extraordinary talents.

Executive Summary

A great deal of modern scientific research uses statistical methods guaranteed to produce flawed statistics that can be disguised as real research. Scientists’ use of flawed statistics and editors’ complaisant practices both contribute to the mass production and publication of irreproducible research in a wide range of scientific disciplines.

This crisis poses serious questions for policymakers. How many federal regulations reflect irreproducible, flawed, and unsound research? How many grant dollars have funded irreproducible research? How widespread are research integrity violations? Most importantly, how many government regulations based on irreproducible science harm the common good?

The National Association of Scholars’ (NAS) project Shifting Sands: Unsound Science and Unsafe Regulation examines how irreproducible science negatively affects select areas of government policy and regulation. We also seek to demonstrate procedures that can detect irreproducible research.

Implicit bias theory is based on the Implicit Association Test (IAT) measurement. This fourth policy paper in the Shifting Sands project had two objectives: (1) to examine implicit bias theory and its use in practice, and (2) to perform two technical studies of the reproducibility and predictability of the IAT measurement in research.

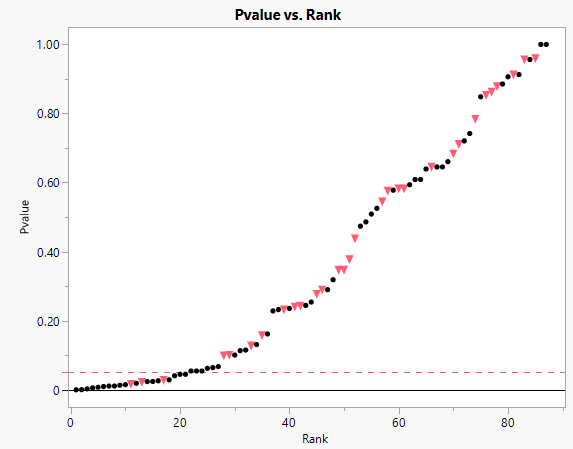

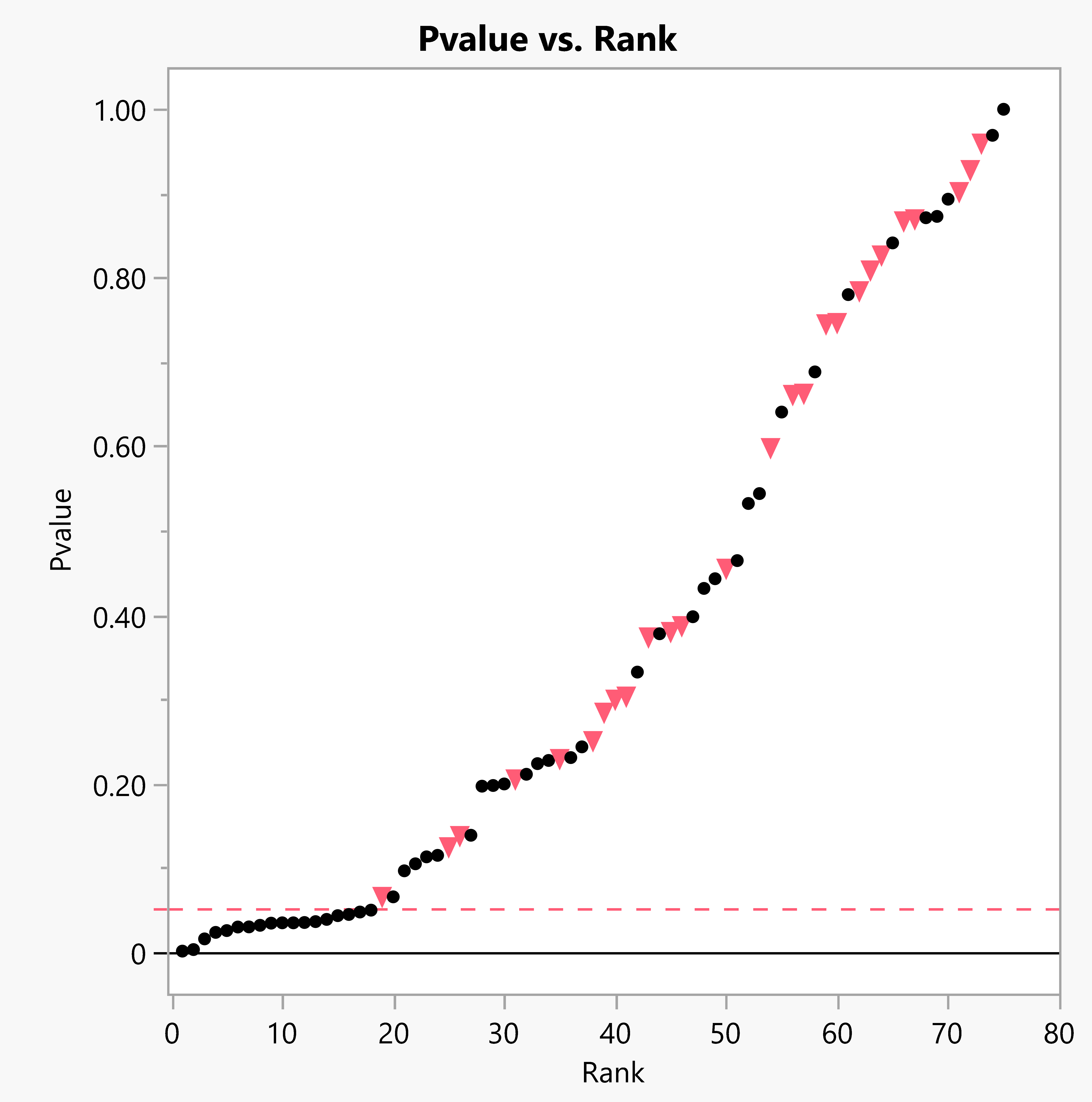

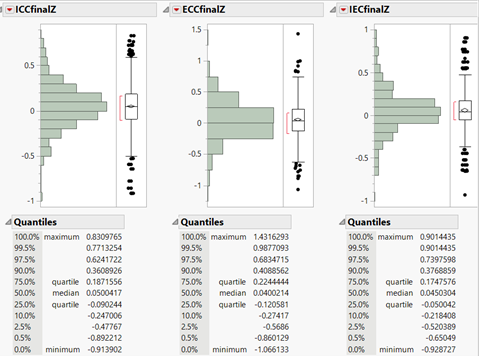

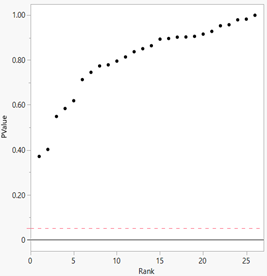

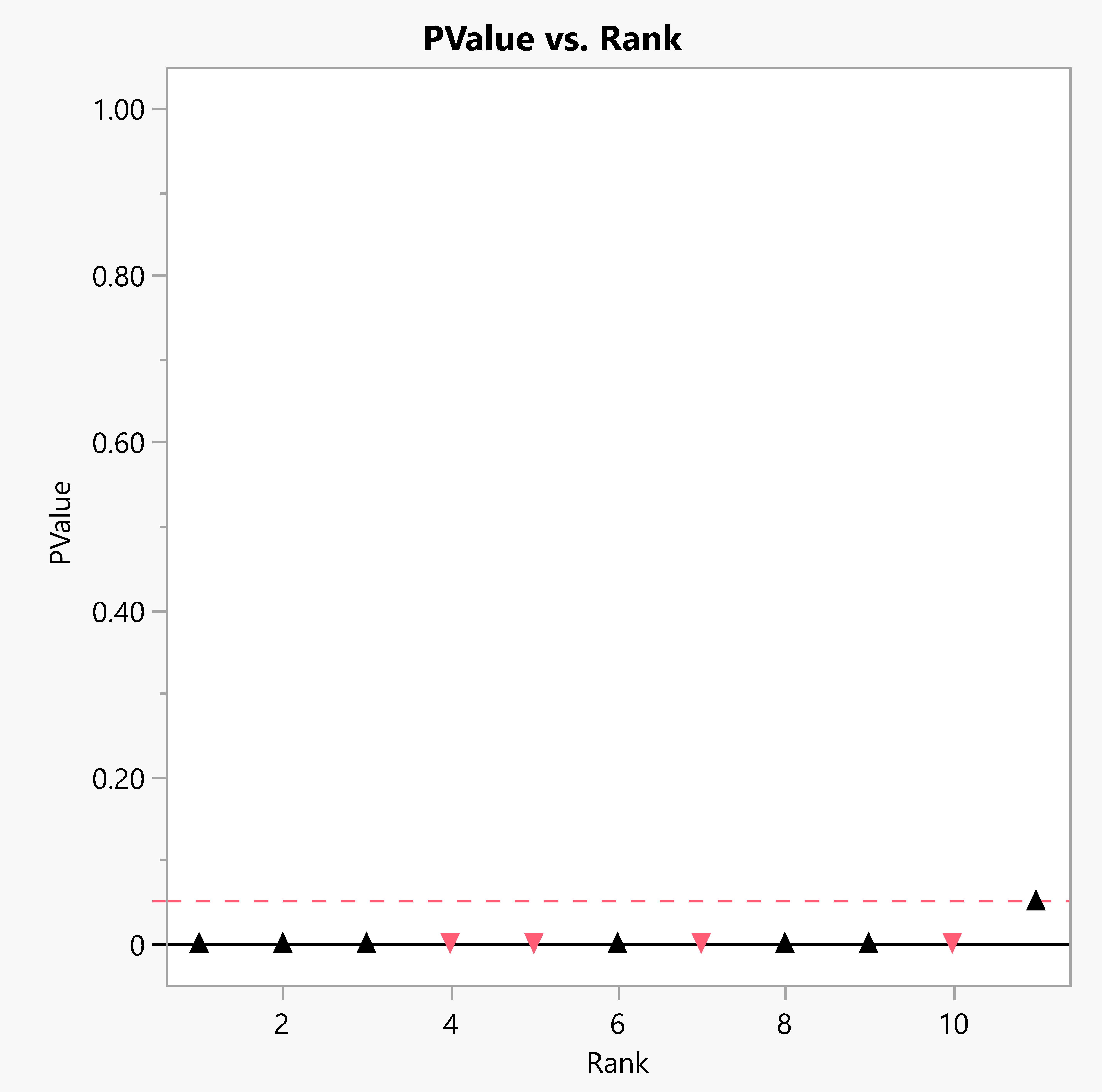

The two technical studies use a method, p-value plotting, as a severe test to assess the validity (and reproducibility) of specific research claims based on IAT measurements. The first technical study focused on assessing a research claim for IAT–real-world behavior correlations relating to racial bias of whites toward blacks in general.

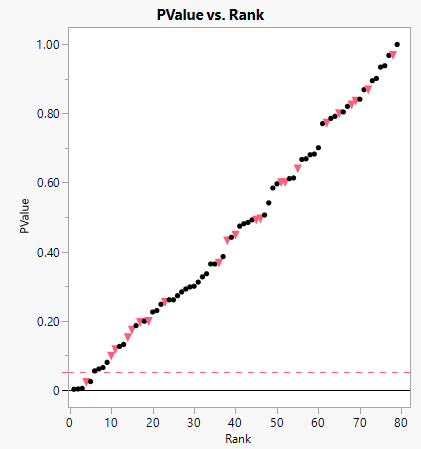

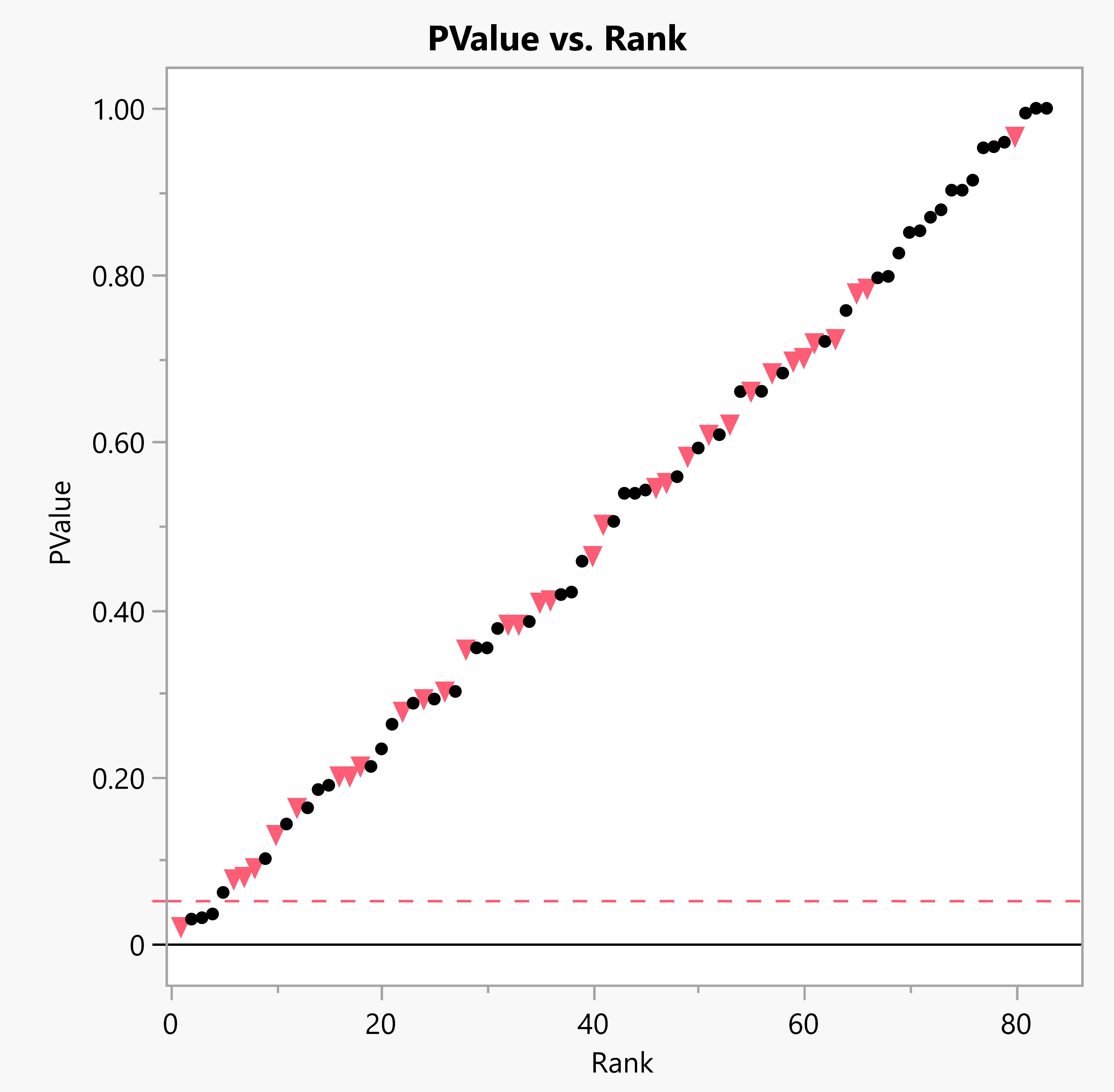

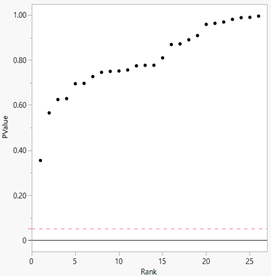

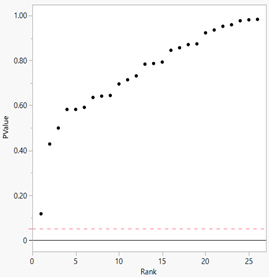

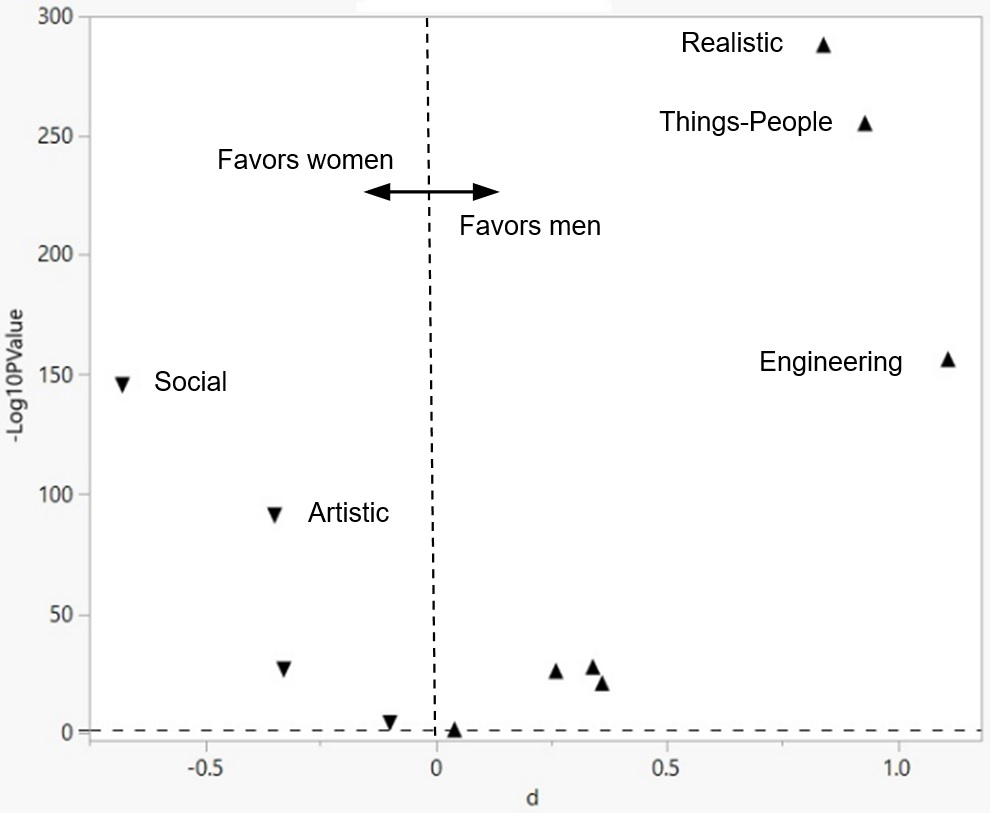

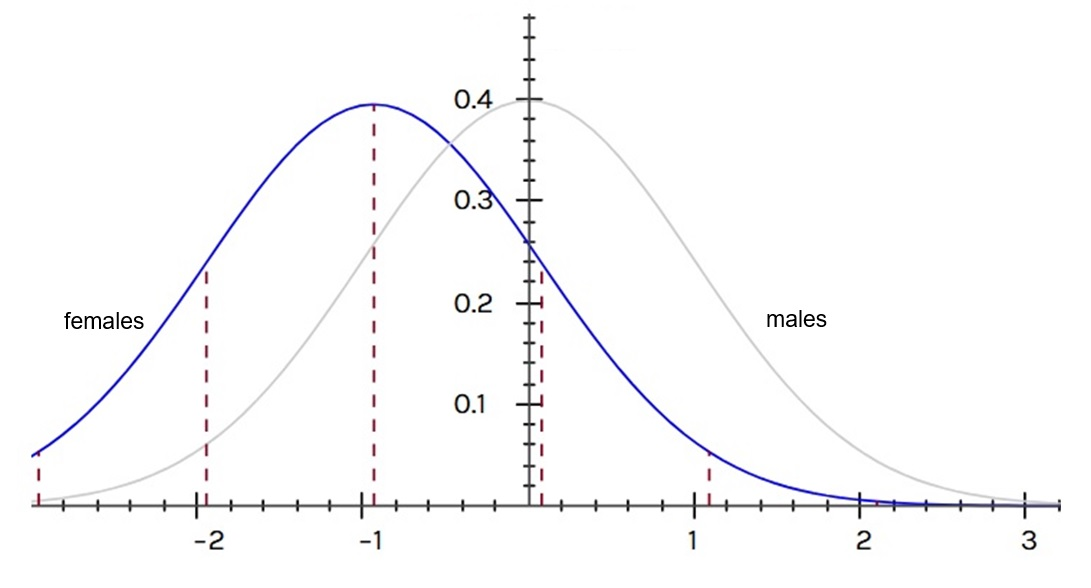

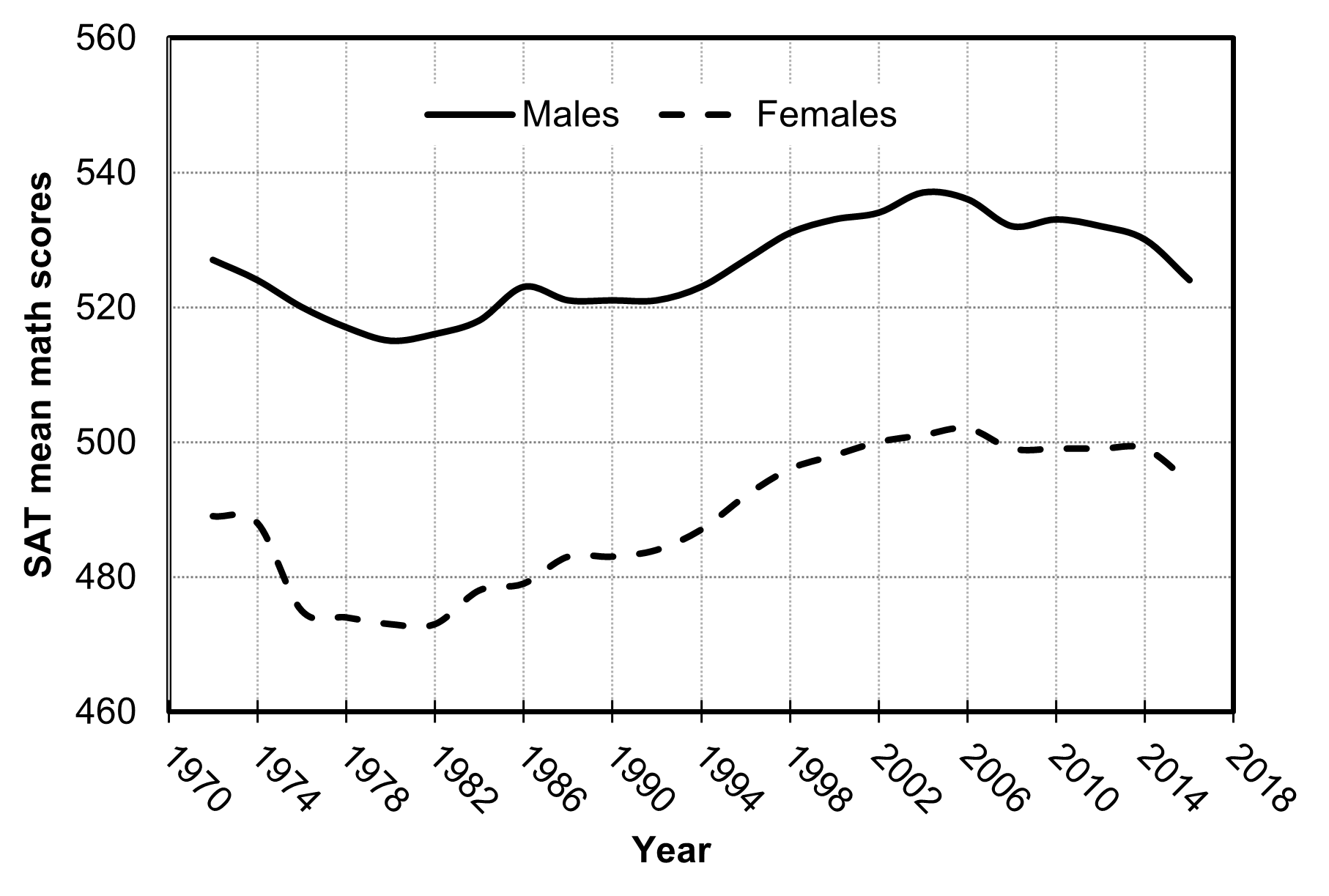

The second technical study focused on assessing a research claim for IAT–real-world behavior correlations relating to sex bias (bias of males toward females) in high-ability careers. The second study also looked at confounders (unexamined variables that affect the analyzed variables and that, when accounted for, alter their alleged relationship) that implicit bias theory should have considered and that further weaken that theory’s evidentiary basis.

Our examination of implicit bias theory and its use in practice finds that a growing number of researchers and legislators believe that policies based on this theory have been at best useless and at worst actively harmful. Furthermore, policies devised to reduce implicit bias seem to be either ineffective or counterproductive.

Our technical studies examining the use of the IAT measurement in research—the tool that supposedly best measures implicit bias—find that the IAT does not appear to measure implicit bias accurately or reliably. Our first technical study finds that there is a lack of correlation between the IAT measurement and real-world behaviors of whites toward blacks in general.

Our second technical study finds that there is a lack of correlation between the IAT measurement and real-world behaviors of males toward females in high-ability careers. The findings of both technical studies reinforce the notion that implicit bias measures have little or no ability to explain race and sex differences.

Policymakers at every level have introduced laws and regulations based on implicit bias theory—federal bureaucrats, governors, state lawmakers, city officials, executives of professional associations, and more. The citizens of a free republic should not allow such policies to rule them. Since implicit bias theory and its works have been revealed to be hollow pseudoscience, policymakers should work at once to remove the infringements of liberty undertaken in its name.

We offer four recommendations that are abstract principles designed to guide policymakers and the public in every venue to right the massive wrongs imposed by laws and regulations based on implicit bias theory. These are described further in the report:

- Rescind all laws, regulations, and programs based on implicit bias theory.

- Establish a federal commission to determine on what grounds social science research (i.e., the field of research used to justify implicit bias theory and other like theories) should be cited.

- Establish federal and state legislative committees to oversee social scientific support for proposed laws and regulations.

- Support education for lawyers and judges on the irreproducibility crisis, social science research, and best legal and judicial practices for assessing social science research and the testimony of expert witnesses.

We have subjected the science underpinning implicit bias theory to serious scrutiny. At present, we believe that policymakers and the public can enact substantial reform if they follow the principle that puts individual responsibility and the rule of law above the hollow pseudoscience of implicit bias theory.

Governments should use the very best science—whatever the regulatory consequences. Scientists should use the very best research procedures—whatever results they find in the social scientific study of human behavior. Those principles are the twin keynotes of this report. The very best science and the very best research procedures require building evidence on the solid rock of transparent, reproducible, and actually reproduced scientific inquiry, not on shifting sands.

Introduction

Anthony Greenwald and Mahzarin Banaji, creators of the Implicit Association Test (IAT), held a press conference along with Brian Nosek in 1998 to publicize the IAT and announce the launch of the Project Implicit website. At the press conference, they claimed that the race IAT revealed unconscious prejudice that affects “90 to 95 percent of people.” Greenwald and Banaji expressed hope that “the test ultimately can have a positive effect despite its initial negative impact. The same test that reveals these roots of prejudice has the potential to let people learn more about and perhaps overcome these disturbing inclinations.”7 Publicity surrounding the publication of the first IAT study led to stories in Psychology Today, the Associated Press, and the New York Times. These articles generated further articles and further publicity for the IAT. Mitchell judges that “a review of the public record leaves little doubt that the seminal event in the public history of the implicit prejudice construct was the introduction of the IAT in 1998, followed closely by the launching of the Project Implicit website in that same year.”8

Implicit bias theory draws upon a series of pivotal psychology articles in the 1990s, above all the 1995 article by Anthony Greenwald and Mahzarin Banaji that first defined the concept of implicit or unconscious bias.9 These articles argued, drawing upon the broader theory of implicit learning,10 that individuals’ behavior was determined, regardless of their individual intent, by “implicit bias” or “unconscious bias.” These biases significantly and pervasively affected individuals’ actions and were irremovable, or very difficult to remove, by conscious intent. Notably, researchers measured such biases in terms of race and sex, the categories of identity politics that fit with radical ideology and that were at issue in contemporary antidiscrimination law.

In 2020, Lee Jussim summarized the real-world context of implicit bias research and the IAT:

- The coiners of implicit bias made public claims far beyond the scientific evidence.

- Activists find implicit bias and implicit bias training politically useful, associated consultants find it financially lucrative, and bureaucrats find it useful as a way to address rhetorical and legal accusations of discriminatory behavior.

- Scientific and activist bias overstates the power and pervasiveness of implicit bias.11

In that same year, Jussim also noted that “defining associations of concepts in memory as ‘bias’ imports a subterranean assumption that there is something wrong with those associations in the absence of empirical evidence demonstrating wrongness.”12 Jussim added that

modern IAT scores cannot possibly explain slavery, Jim Crow, or the long legacy of their ugly aftermaths. Furthermore, there was nothing “implicit” about such blatant laws and norms. IAT scores in the present cannot possibly explain effects of past discrimination, because causality cannot run backwards in time. Thus, to whatever extent past discrimination has produced effects that manifest as modern gaps, modern IAT scores cannot possibly account for such effects. … To the extent that explicit prejudice causes discrimination, it may contribute to racial gaps. However, explicit prejudice is not measured by IAT scores.13

Gregory Mitchell and Philip E. Tetlock also noted in 2017 that

this degree of researcher freedom to make important societal statements about the level of implicit prejudice in American society, with no requirement that those statements be externally validated through some connection to behavior or outcomes, points to the potential mischief that attends a test such as the IAT that employs an arbitrary metric.14

These cautions by Jussim, Mitchell, and Tetlock have not been heeded. Laws and regulations based upon implicit bias theory now pervade American government and society and threaten to become omnipresent.

Reforming Government Regulatory Policy: The Shifting Sands Project

The National Association of Scholars’ (NAS) project Shifting Sands: Unsound Science and Unsafe Regulation examines how irreproducible science negatively affects select areas of government policy and regulation.15 We also aim to demonstrate procedures that can detect irreproducible research. We believe policymakers should incorporate these procedures as they determine what constitutes “best available science,” the standard that judges which research should inform government regulation.16

In Shifting Sands we use the same analysis strategy for all our policy papers, p-value plotting (a visual form of Multiple Testing and Multiple Modeling (MTMM) analysis), as a way to demonstrate weaknesses in the government’s use of meta-analyses. MTMM corrects for statistical analysis strategies that produce a large number of false-positive statistically significant results, and, since irreproducible results from base studies produce irreproducible meta-analyses, MTMM allows us to detect these irreproducible meta-analyses.17

In other words, a great deal of modern scientific research uses statistical methods guaranteed to produce statistical hallucinations that can be disguised as real research. P-value plotting provides a means to simply look at the results of a body of research and see whether it is based on these statistical hallucinations.
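The idea behind a p-value plot can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the Shifting Sands authors' plotting code: it assumes the p-values come from independent tests, uses the common plotting position i/(n+1) as the expected location of the i-th smallest p-value when every null hypothesis is true, and uses hypothetical function names and simulated (not real) study data. When no real effect exists, p-values are uniform on [0, 1], so sorted p-values plotted against rank fall close to a 45-degree line.

```python
import random


def p_value_plot_points(p_values):
    """Return (rank, observed p, expected p) triples for a p-value plot.

    Observed p-values are sorted from smallest to largest. If every tested
    null hypothesis is true and the tests are independent, p-values are
    uniform on [0, 1], so the i-th smallest of n is expected near i / (n + 1).
    Plotted against rank (with the rank axis scaled to run from 0 to 1),
    such p-values fall close to a 45-degree line.
    """
    ordered = sorted(p_values)
    n = len(ordered)
    return [(rank, p, rank / (n + 1)) for rank, p in enumerate(ordered, start=1)]


def max_deviation_from_line(points):
    """Largest absolute gap between an observed and an expected p-value."""
    return max(abs(p - expected) for _, p, expected in points)


if __name__ == "__main__":
    rng = random.Random(2024)  # fixed seed so the illustration is repeatable
    # Hypothetical body of 40 studies in which no real effect exists.
    no_effect = [rng.random() for _ in range(40)]
    # Hypothetical body of 40 studies, 30 of which reflect a strong real effect.
    real_effect = [rng.random() * 0.01 for _ in range(30)] + [rng.random() for _ in range(10)]
    for label, ps in (("no effect", no_effect), ("real effect", real_effect)):
        points = p_value_plot_points(ps)
        print(f"{label}: max deviation from the 45-degree line = "
              f"{max_deviation_from_line(points):.3f}")
```

Plotting the observed values against rank with any charting tool gives the visual version. The single summary number here is only a stand-in; the reports' own reading of plot shapes goes beyond it.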

In general, scientists are at least theoretically aware of the danger of the mass production of false-positive research results, although they have done far too little to correct their professional practices. Methods to adjust for MTMM have existed for decades. The Bonferroni method simply adjusts each p-value by multiplying it by the number of tests. Westfall and Young provide a simulation-based method for correcting an analysis for MTMM.18
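The Bonferroni adjustment is simple enough to show directly. This is a minimal sketch with a hypothetical function name and made-up p-values: it multiplies each p-value by the number of tests and caps the result at 1, since a probability cannot exceed 1. The simulation-based Westfall and Young method is more involved and is not shown here.

```python
def bonferroni_adjust(p_values):
    """Bonferroni adjustment: multiply each p-value by the number of tests, capped at 1."""
    m = len(p_values)  # number of tests performed
    return [min(1.0, p * m) for p in p_values]


if __name__ == "__main__":
    raw = [0.004, 0.03, 0.2, 0.6]  # hypothetical p-values from four tests
    adjusted = bonferroni_adjust(raw)
    print(adjusted)  # → [0.016, 0.12, 0.8, 1.0]
    # Which results remain significant at the 0.05 level after adjustment?
    print([p < 0.05 for p in adjusted])  # → [True, False, False, False]
```

In this example the second test, nominally significant at p = 0.03, no longer clears the 0.05 threshold once all four tests are taken into account.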

In practice, however, far too much “research” simply ignores the danger of the wholesale creation of false-positive results. Researchers can use MTMM until they find an exciting result to submit to the editors and referees of a professional journal—in other words, they can p-hack.19 Editors and referees, eager to publish high-profile, “groundbreaking” research, have an incentive to trust, far too credulously, that researchers have done due statistical diligence, so they can publish exciting papers and have their journal recognized in the mass media.20

Much contemporary psychological research is a component of this larger irreproducibility crisis, which has led to the mass production and publication of irreproducible research.21 Many improper scientific practices contribute to the irreproducibility crisis, including poor applied statistical methodology, incomplete or inaccurate data reporting, publication bias (the skew toward publishing exciting, positive results), fitting the hypotheses to the data after looking at the data, and endemic groupthink.22 Far too many scientists use these and other improper scientific practices, including an unfortunate portion who commit deliberate data falsification.23 The entire incentive structure of the modern complex of scientific research and regulation now promotes the mass production of irreproducible research.24

A large number of scientists themselves have lost overall confidence in the body of claims made in scientific literature.25 The ultimately arbitrary decision to declare p<0.05 as the standard of “statistical significance” has contributed extraordinarily to this crisis. Most cogently, Boos and Stefanski have shown that an initial result likely will not replicate at p<0.05 unless it possesses a p-value below 0.01, or even 0.001.26 Numerous other critiques concerning the p<0.05 problem have been published.27 Many scientists now advocate changing the definition of statistical significance to p<0.005.28 But even here, these authors assume only one statistical test and near-perfect study methods.
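A simple calculation illustrates why an initial p-value near 0.05 is weak evidence that a result will reproduce. The sketch below is not Boos and Stefanski's method; it is a swapped-in, deliberately optimistic back-of-the-envelope calculation. It assumes a single test, an exact replication with the same sample size, and a true effect exactly equal to the effect first observed. Function and constant names are hypothetical.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def naive_replication_probability(p_initial: float, alpha: float = 0.05) -> float:
    """Chance that an exact replication reaches two-sided p < alpha.

    Optimistic assumption: the true effect equals the effect observed in the
    initial study, so the replication z-statistic is Normal(z_initial, 1).
    Only the tail in the same direction as the original finding is counted.
    """
    z_initial = STANDARD_NORMAL.inv_cdf(1 - p_initial / 2)  # observed z-statistic
    z_critical = STANDARD_NORMAL.inv_cdf(1 - alpha / 2)     # about 1.96 for alpha = 0.05
    return 1 - STANDARD_NORMAL.cdf(z_critical - z_initial)


if __name__ == "__main__":
    for p in (0.05, 0.01, 0.005, 0.001):
        print(f"initial p = {p}: replication probability = "
              f"{naive_replication_probability(p):.2f}")
```

Under these assumptions, an initial p = 0.05 gives only about a 50% chance of replicating at p<0.05, while p = 0.01 gives roughly 73%, p = 0.005 about 80%, and p = 0.001 about 91%. Published significant effects tend to overstate true effects, and real studies involve many tests, so actual replication rates are likely to be lower than these figures.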

Researchers themselves have become increasingly skeptical of the reliability of claims made in contemporary published research.29 A 2016 survey found that 90% of surveyed researchers believed that modern scientific research was subject to either a major (52%) or a minor (38%) crisis in reliability.30 Begley reported in Nature that 47 of 53 research results in experimental biology could not be replicated.31 A coalescing consensus of scientific professionals realizes that a large portion of “statistically significant” claims in scientific publications, perhaps even a majority in some disciplines, is false—and certainly should not be trusted until such claims are reproduced.32

Shifting Sands aims to demonstrate that the irreproducibility crisis has affected so broad a range of government regulation and policy that government agencies (and private institutions and private enterprises) should now thoroughly modernize the procedures by which they judge “best available science.” Agency regulations should address all aspects of irreproducible research, including the inability to reproduce:

- the research processes of investigations;

- the results of investigations; and

- the interpretation of results.33

Our common approach supports a comparative analysis across different subject areas while allowing for a focused examination of one dimension of the effect of the irreproducibility crisis on government agencies’ policies and regulations.

Keeping Count of Government Science: P-Value Plotting, P-Hacking, and PM2.5 Regulation focused on irreproducible research in environmental epidemiology that informs the U.S. Environmental Protection Agency’s policies and regulations.34

Keeping Count of Government Science: Flimsy Food Findings: Food Frequency Questionnaires, False Positives, and Fallacious Procedures in Nutritional Epidemiology focused on irreproducible research in nutritional epidemiology that informs much of the U.S. Food and Drug Administration’s nutrition policy.35

Keeping Count of Government Science: The Confounded Errors of Public Health Policy Response to the COVID-19 Pandemic focused on the failures of the U.S. Centers for Disease Control and Prevention and the National Institutes of Health (NIH) to consider empirical evidence available in the public domain early in the pandemic. These mistakes eventually contributed to a public health policy that imposed substantial economic and social costs on the United States, with little or no public health benefit.36

Zombie Psychology

The first two Shifting Sands reports discussed the economic consequences of the irreproducibility crisis, namely the costs, running to hundreds of billions of dollars annually, of scientifically unfounded federal regulations issued by the Environmental Protection Agency (EPA) and the Food and Drug Administration (FDA). They also showed how activists within the regulatory complex piggyback upon politicized groupthink and false-positive results to create entire scientific subdisciplines and regulatory empires. The third Shifting Sands report brought into focus the deep connection between the irreproducibility crisis and the radical-activist state via intervention degrees of freedom: the freedom of radical activists in federal bureaucracies to make policy unrestrained by law, prudence, consideration of collateral damage, offsetting priorities, our elected representatives, or public opinion.

This fourth and final Shifting Sands report explores how radical activists have used implicit bias theory as the justification for policies by the federal, state, and local government, and by American private institutions and enterprises, to remake American government and society. Most dangerously, as we shall see below, implicit bias theory is being used to corrupt the realm of law and justice by replacing individual proof of guilt with “proof” by statistical association and to thereby degrade the rule of law, due process, the presumption of innocence, and individual responsibility. It also corrupts the ideal of justice—that the courts shall give to each individual what he is due in law, as an irreducible component of the aspiration to provide justice to all mankind. Implicit bias is the tool of those who act to destroy American law and American justice.

Zombie Psychology provides an overview of the career of implicit bias theory and the IAT measurement, and of the critiques of both the theory and the measurement. Zombie Psychology then summarizes two technical studies, which apply Multiple Testing and Multiple Modeling analysis and construct p-value plots to assess the validity of the IAT. The first technical study assesses claims for IAT–real-world behavior correlations relating to race, and the second assesses claims for IAT–real-world behavior correlations relating to sex. Together, these two studies provide further evidence that the IAT is insufficient for its two main uses: measuring implicit bias according to race and according to sex. The second study also highlights confounders (unexamined variables that affect the analyzed variables and that, when accounted for, alter their putative relationship) that implicit bias theory should have considered and that further weaken this theory’s evidentiary basis. We then conclude with recommendations for policy changes to preserve American government and society, and above all our legal system, from policies based on implicit bias theory.
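A p-value plot ranks a set of p-values from smallest to largest and plots each against its rank. When the tests involve no real effect, p-values are uniformly distributed, so the points fall roughly along a straight line from near 0 to near 1. The sketch below computes only the plot coordinates, which can then be drawn with any plotting tool, plus a crude count of excess small p-values. It is not the code used in the technical studies; the function names and example p-values are hypothetical.

```python
from typing import List, Sequence, Tuple


def p_value_plot_points(p_values: Sequence[float]) -> List[Tuple[int, float, float]]:
    """Return (rank, observed p, expected p under the null) triples.

    Under the null hypothesis p-values are uniform on [0, 1], so the
    i-th smallest of n p-values is expected to lie near i / (n + 1).
    """
    if any(not 0.0 <= p <= 1.0 for p in p_values):
        raise ValueError("p-values must lie in [0, 1]")
    ordered = sorted(p_values)
    n = len(ordered)
    return [(rank, p, rank / (n + 1)) for rank, p in enumerate(ordered, start=1)]


def excess_small_p_values(p_values: Sequence[float], alpha: float = 0.05) -> float:
    """Observed count of p < alpha minus the count expected by chance alone."""
    observed = sum(1 for p in p_values if p < alpha)
    return observed - alpha * len(p_values)


if __name__ == "__main__":
    # Hypothetical p-values, not data from the studies discussed here.
    example = [0.81, 0.02, 0.47, 0.33, 0.64, 0.009, 0.91, 0.28, 0.55, 0.12]
    for rank, observed, expected in p_value_plot_points(example):
        print(f"{rank:>2}  observed={observed:.3f}  expected={expected:.3f}")
    print("excess p < 0.05:", excess_small_p_values(example))
```

Comparing observed with expected values shows whether the small p-values stand apart from the straight line that chance alone would produce. A count of significant results by itself cannot make that distinction, which is why the plot displays the whole distribution.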

The Career of Implicit Bias Theory

Origins

“Implicit bias,” or “unconscious bias,” is partly a product of psychological science and partly a product of “antidiscrimination” advocates seeking a workaround to existing constitutional law. The Constitution prohibits individual discrimination; “implicit bias” provides a putatively scientific loophole that justifies and permits discrimination by American institutions.

As noted in the introduction, the psychology of implicit bias draws upon a series of pivotal psychology articles in the 1990s, above all the 1995 article by Anthony Greenwald and Mahzarin Banaji that first defined the concept of implicit or unconscious bias.37 These articles argued, drawing upon the broader theory of implicit learning,38 that individuals’ behavior was determined, regardless of their individual intent, by “implicit bias,” or “unconscious bias.” Such biases, they held, significantly affected individuals’ actions, were pervasive, and could not be removed by conscious intent, or could be removed only with great difficulty. Significantly, researchers measured such biases in terms of race and sex, the categories of identity politics that fit with radical ideology and that were at issue in contemporary antidiscrimination law.

Greenwald and Banaji also promoted the Implicit Association Test as a way to measure implicit bias. The IAT is one of a series of attempts by psychologists since ca. 1970 to circumvent false self-reports and assess “true” individual bias. The IAT was meant to overcome the known frailties of earlier techniques, although it seems rather to have recapitulated them.39
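The IAT infers bias from reaction times. Its premise is that respondents sort words and images faster when the paired categories on screen match their associations ("compatible" blocks) than when they do not ("incompatible" blocks). The sketch below is heavily simplified and only loosely modeled on the widely used "D score" scoring approach. It divides the difference in mean latencies by the standard deviation of all retained trials and discards trials slower than 10,000 ms. It omits error penalties and separate scoring of practice and test blocks, and the function name and latencies are hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence

MAX_LATENCY_MS = 10_000  # assumed cutoff: slower trials are discarded


def simplified_iat_d_score(compatible_ms: Sequence[float],
                           incompatible_ms: Sequence[float]) -> float:
    """Simplified IAT-style score: mean latency difference / pooled SD.

    Positive values mean slower responses in the 'incompatible' block.
    Omits error penalties and separate practice/test block scoring.
    """
    compatible = [t for t in compatible_ms if t <= MAX_LATENCY_MS]
    incompatible = [t for t in incompatible_ms if t <= MAX_LATENCY_MS]
    if len(compatible) < 2 or len(incompatible) < 2:
        raise ValueError("need at least two usable trials per block")
    # Standard deviation computed over all retained trials from both blocks
    pooled_sd = stdev(compatible + incompatible)
    if pooled_sd == 0:
        raise ValueError("latencies show no variation")
    return (mean(incompatible) - mean(compatible)) / pooled_sd


if __name__ == "__main__":
    # Hypothetical latencies in milliseconds for one respondent.
    print(round(simplified_iat_d_score([600, 650, 700], [800, 850, 900]), 3))
```

The resulting score is a standardized difference in response speed. Treating that difference as a measure of bias, and as a predictor of real-world behavior, is the inference that the critiques and technical studies examine.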

Mitchell judges that “a review of the public record leaves little doubt that the seminal event in the public history of the implicit prejudice construct was the introduction of the IAT in 1998, followed closely by the launching of the Project Implicit website in that same year.”40 Such publicity continued over the following decades, notably including Banaji and Greenwald’s popularizing 2013 book, Blindspot: Hidden Biases of Good People.41 Greenwald et al. explicitly have sought to use their research to affect the operations of the law: “The central idea is to use the energy generated by research on unconscious forms of prejudice to understand and challenge the notion of intentionality in the law.”42 These researchers, and some of their colleagues, have been active in legal education, served as expert witnesses, promoted diversity trainings, promoted paid consulting services by Project Implicit, Inc., collected federal grant money, and more. In so doing, they have made bold but unsubstantiated claims about the solidity of implicit bias research and the size of implicit bias’s effects. Acolytes have disseminated their arguments to audiences including the police, public defenders, human resource advisors, and doctors.43

Laws and regulations based upon implicit bias theory now pervade American government and society and threaten to become omnipresent.

Pervasive Adoption

Law

Radical advocates of implicit bias theory soon imported implicit bias research into the legal arena.44 It was a godsend to lawyers, and then to regulators and politicians, seeking to work around existing constitutional law. Existing antidiscrimination law had chipped away substantially at traditional legal conceptions of individual intent and responsibility, by means of “disparate impact”—the doctrine that a law, policy, or practice that disproportionately affects a protected group of people is illegal, regardless of whether the law, policy, or practice is intended to discriminate or has any other justification. Yet the legal precedents continued to affirm the importance of individual intent in large areas of antidiscrimination law. Federal antidiscrimination law generally requires proof of intent: “In most anti-discrimination cases, the plaintiff has the burden of proving the defendant acted with a purpose to discriminate, essentially eliminating an implicit bias claim.” The Supreme Court generally requires a high evidentiary standard for claims of discrimination.45

Implicit bias provided a new argument—that implicit biases were so strong that the law on individual intent was no longer sufficient. Kang took the evidence of implicit bias to justify a belief that “adding implicit bias to the story explains why even without such explicit racism, the segregation of the past can endure into the future simply by the actions of ‘rational’ individuals pursuing their self-interest with slightly biased perceptions driven by implicit associations we aren’t even aware of.”46 Grine further noted that “the tension between the science of implicit bias and the demands of the intent standard has become more evident in recent years, as social scientists have gained insights into the pervasiveness of implicit biases. … Such unconscious biases could produce discriminatory results in settings including health care, education, housing, and criminal justice.”47

The proposed answer was to change antidiscrimination law by replacing the intent standard with an implicit bias standard:

The intent standard does not arise from the text of the Equal Protection Clause or from the history of its adoption. The Davis Court embraced the standard based largely on a “floodgates” type of rationale: the Court was concerned that a broader understanding of discrimination “would be far-reaching and would raise serious questions about, and perhaps invalidate, [a wide range of laws].” … In adopting the intent standard, the Court effectively required consideration of the mind sciences in order to uphold the guarantee of equal protection under the law. It is therefore necessary to take proper account of the latest research in the mind sciences when interpreting discrimination claims raised under the Equal Protection Clause.48

An implicit bias standard would allow lawyers to seize on the provision of antidiscrimination law that makes a “hostile environment” an actionable offense. Implicit bias theory treats any inequity, not least one detected by a statistical study, as evidence of implicit bias and, hence, of a hostile environment. To adopt an implicit bias standard would be to replace individual intent with statistical associations (disparate impact) in antidiscrimination law.

Such changes already have begun to be recognized in American legal systems. Vermont antidiscrimination law, for example, recognizes “unintentional discrimination,” which includes “microaggressions, unconscious biases, and unconsciously held stereotypes.”49

The implications of this change are extraordinary. As Blevins notes, “if government can ban individual judgment just because that judgment might be faulty, then we’ve abandoned the basic premise of limited government.”50 Kang’s concluding rhetorical flourish is more telling: “We’ve met the enemy, and it is us.” His turn of phrase should be taken seriously: implicit bias justifies policies that regard the American people as perpetual enemies, even absent any intent to discriminate, to be treated in law as enemies rather than as citizens.51

And implicit bias theory already has proceeded from legal theory to actual law and regulation.

Equal Employment Opportunity Commission

No single agency authorized the recognition and the use of implicit bias in law and regulation. Yet a very important stage in the legal recognition of implicit bias was the Equal Employment Opportunity Commission’s (EEOC) 2007 initiative Eradicating Racism and Colorism from Employment, which gave legal recognition to implicit bias—and prescribed diversity training to employers as a means to address it. The EEOC filed discrimination lawsuits based on unconscious bias against Walmart and Walgreens.52 Although the suit against Walmart ultimately failed, the Walgreens suit was successful: the company settled for $24 million.53

The EEOC’s adoption of implicit bias gave lawyers important support, if not a guaranteed victory, in their use of implicit bias in the courts. But few corporations have the resources or the persistence of Walmart. Corporations that wished to avoid the expenses and risks of a lawsuit were advised to adopt diversity trainings preemptively to protect themselves against legal liability for “unconscious bias.”

How are prudent employers to prevent ‘unconscious bias’ or ‘subtle’ racism? How do you protect your company from a potentially devastating class action lawsuit like the one being faced by Walgreens? The best answers come from a non-binding ‘Guidance’ issued on the subject of race and color discrimination last year by the EEOC. This very broad Guidance, which suggests employers need to take serious action and affirmative steps to eradicate race discrimination, demonstrated how seriously the EEOC takes race and color discrimination. … employers must be attuned to the subtle and unconscious ways that race and color stereotypes and bias can negatively affect all aspects of an individual’s employment, such as networking, mentoring, etc.54

The power of federal antidiscrimination law has accelerated “voluntary” adoptions of implicit bias as a concept and implicit bias tests as a practice, even absent direct legislative and administrative requirements. Weighing costs and benefits, myriad private actors adopted and disseminated implicit bias training and ideology as a low-cost way to avoid the possibility of an antidiscrimination lawsuit.

And, of course, the EEOC has continued to press its understanding of implicit bias on corporations. In 2021, the EEOC launched “a diversity, equity, and inclusion (DE&I) workshop series, starting with ‘Understanding Unconscious Bias in the Workplace.’”55 Corporations acquiesce to implicit bias theory and practice in the face of continuing pressure from the EEOC.

Implicit Bias Policies

Implicit bias has entered American policy diffusely, by means of a variety of federal administrative actions, state statutes, state administrative requirements, local ordinances, and private sector policies. Implicit bias policies advanced steadily until 2020, when their imposition accelerated significantly in the wake of the George Floyd riots. A widening number of states and localities have required “implicit bias” and “unconscious bias” trainings, in the legal profession, the police, the medical professions, and more. Academics, moreover, are preparing the way to use “implicit bias” in ever more extensive areas of American life, including the jury system, the operation of the courts, and all areas of government policy, from the health system to the schools to real estate licensure.

Government Legal Personnel

In 2016, the U.S. Department of Justice (DOJ) announced that it would “train all of its law enforcement agents and prosecutors to recognize and address implicit bias as part of its regular training curricula.” This requirement would immediately affect more than 28,000 department employees in the FBI, the Drug Enforcement Administration, the Bureau of Alcohol, Tobacco, Firearms and Explosives, the U.S. Marshals Service, and the ninety-four U.S. Attorneys’ Offices. The DOJ planned to eventually train its other personnel, including prosecutors in the department’s litigating components and agents of the Office of the Inspector General. This program expanded on the DOJ’s existing work, since 2010, to provide implicit bias training to state and local law enforcement personnel, via the Office of Community Oriented Policing Services’ Fair and Impartial Policing program.56 At the state level, in 2020 the New Hampshire Office of the Attorney General required implicit bias training “for all attorneys, investigators, legal staff and victim/witness advocates in the Attorney General’s Office, all County Attorney Offices, all state agency attorneys, and all prosecutors, including police prosecutors.”57

Courts

The National Center for State Courts wrote that implicit bias justified new court policies, including policies to “provide routine diversity training that emphasizes multiculturalism and encourage court leaders to promote egalitarian behavior as part of a court’s culture,” “routinely check thought processes and decisions for possible bias,” and “assess visual and auditory communications for implicit bias.”58 California’s biennial training for judges and subordinate judicial officers now includes “a survey of the social science on implicit bias, unconscious bias, and systemic implicit bias, including the ways that bias affects institutional policies and practices,” “the administration of implicit association tests to increase awareness of one’s unconscious biases based on the characteristics listed in Section 11135,” and “inquiry into how judges and subordinate judicial officers can counteract the effects of juror implicit bias on the outcome of cases.”59 Bills to mandate judiciary implicit bias requirements also have been introduced in New Jersey and Texas.60

Jurors

Radical activists also have used implicit bias arguments to justify calls for systematic changes to jury selection and juror decision-making.61 Su argues for some version of implicit bias training for jurors.62 In 2022, Colorado’s General Assembly considered a bill that would allow “courts and opposing counsel to raise objections to the use of peremptory challenges with the potential to be based on racial or ethnic bias in criminal cases.”63

Legal Profession

Bienias argues that implicit bias pervades the legal profession. His prescribed policies therefore include administering the Implicit Association Test and “behavioral changes” to “interrupt” one’s own implicit biases.64 Bienias further recommends “structural changes” in response to implicit bias, including a “commitment by management to diversity,” implicit bias training, a “commitment to women in counter-stereotypical roles,” and mentoring. Bienias argues that one’s active participation in opposing “unconscious bias” is necessary: “Good intentions are not enough; if you are not intentionally including everyone by interrupting bias, you are unintentionally excluding some.”65

Police

The U.S. Department of Justice’s Understanding Bias: A Resource Guide’s Community Relations Services Toolkit for Policing presumes the existence of implicit bias and recommends “positive contacts with members of that group [toward whom one displays implicit bias] … through ‘counter-stereotyping,’ in which individuals are exposed to information that is the opposite of the stereotypes they have about a group,” increasing “cultural competency,” and using the Implicit Association Test (IAT).66 California statute now requires that police training include a diversity education component: “The curriculum shall be evidence-based and shall include and examine evidence-based patterns, practices, and protocols that make up racial or identity profiling, including implicit bias.” A Racial and Identity Profiling Advisory Board, moreover, shall annually “conduct, and consult available, evidence-based research on intentional and implicit biases, and law enforcement stop, search, and seizure tactics.”67 Illinois’s curriculum for probationary law enforcement officers, as well as its triennial in-service training requirements, now must include “cultural competency, including implicit bias and racial and ethnic sensitivity.”68 In 2020, New Jersey Governor Phil Murphy signed legislation requiring law enforcement officers to take implicit bias training as part of their cultural diversity training curriculum.69 In 2022, the New Jersey General Assembly passed two further bills that would require police officers to undergo diversity and implicit bias training.70 Bills to mandate implicit bias requirements in policing have also been introduced in Georgia, Indiana, New Jersey, South Carolina, and South Dakota.71

Education

The U.S. Department of Education’s Guiding Principles: A Resource Guide for Improving School Climate and Discipline (2014) recommends that, “to help ensure fairness and equity, schools may choose to explore the use of cultural competence training to enhance staff awareness of their implicit or unconscious biases. … Where appropriate, schools may choose to explore using cultural competence training to enhance staff awareness of their implicit or unconscious biases and the harms associated with using or failing to counter racial and ethnic stereotypes.”72 Illinois statute now requires that school personnel take implicit bias training.73 In 2020, New Jersey required K–12 schools to include instruction on unconscious bias as part of their mandated instruction in “diversity and inclusion.”74 The New York City Department of Education holds Implicit Bias Awareness workshops: “For the past five years, over 80,000 NYC educators have attended the Implicit Bias Awareness foundational workshop, either in-person or virtually.”75 The New York City Department of Education’s Implicit Bias Team has twelve staff members.76 Bills to mandate implicit bias requirements in education have also been introduced in New Jersey and Texas.77

General Government

In 2021, President Biden’s Executive Order on Diversity, Equity, Inclusion, and Accessibility in the Federal Workforce required the head of each federal agency to use training programs in order for “Federal employees, managers, and leaders to have knowledge of systemic and institutional racism and bias against underserved communities, be supported in building skillsets to promote respectful and inclusive workplaces and eliminate workplace harassment, have knowledge of agency accessibility practices, and have increased understanding of implicit and unconscious bias.”78 The U.S. State Department requires unconscious bias training for its Foreign Service selection panels, all supervisors and managers, and all Foreign Service Selection Boards and Bureau Awards coordinators.79 The Central Intelligence Agency also has imposed “unconscious bias training.”80 At the state level, the New Jersey General Assembly passed two bills in 2022 that would require state lawmakers to undergo diversity and implicit bias training.81 At the local level, Columbus, Ohio now offers a citywide training course in implicit bias,82 while San Francisco’s Ordinance 71-19 requires members of city boards and commissions and city department heads to complete the Department of Human Resources’s online implicit bias training.83

Medical

Implicit bias policies have been mandated particularly intensively in the medical fields. The Institute of Medicine, now known as the National Academy of Medicine, lent credibility to implicit bias policies in medicine in its Unequal Treatment: Confronting Racial and Ethnic Disparities in Health Care (2003), which stated that implicit bias contributed to minority populations’ poorer health outcomes.84 At the federal level, the Centers for Disease Control and Prevention prescribes implicit bias training as a way to achieve so-called “health equity” and recommends that employers “train employees at all levels of the organization to identify and interrupt all forms of discrimination.”85 Since 2019, often prompted by the supposedly disparate effects of COVID-19,86 California,87 Illinois,88 Maryland,89 Massachusetts,90 Michigan,91 Minnesota,92 New York,93 and Washington94 have all mandated implicit bias training for some or all health care workers as a prerequisite for professional licensure or renewal.95 Bills to mandate medical implicit bias requirements also have been introduced in Indiana, Nebraska, New Jersey, New York, North Carolina, Oklahoma, South Carolina, Tennessee, Texas, Vermont, Virginia, and West Virginia.96 Medical schools such as Harvard Medical School, the Icahn School of Medicine at Mount Sinai in New York, and the Ohio State University College of Medicine have begun to offer or require implicit bias training, independent of state requirements.97

Science

At the federal level, in 2015 the Office of Science and Technology Policy and the Office of Personnel Management established the Interagency Policy Group on Increasing Diversity in the STEM Workforce by Reducing the Impact of Bias (IPG). The IPG catalogued and/or recommended unconscious bias training and implicit bias training throughout the government agencies that hire scientific workforces, including the National Institutes of Health, the Department of Energy, and NASA. The NIH also planned to apply implicit bias evaluations to its R01 grant awards. The IPG, moreover, recommended:

Proactive Use of Diversity, Equity, or Inclusion Grants: Institutions can pursue programmatic support to develop and employ policies, practices, training materials, and recruitment and retention strategies designed to mitigate any bias in higher education.

Recommended solutions to alleged sex and race bias in grant reviews included “educational intervention on implicit bias,” which, the IPG claims, “reduced faculty members’ implicit bias regarding women and leadership (as measured by the Implicit Association Test).”98 The IPG also stated that the Department of Energy

will develop and promote “bias interrupters” as a resource for managers and employees who complete training related to mitigating the impact of bias. The “bias interrupters” will be tips and practices that employees can use to improve the objectivity and quality of decisions related to hiring, promotions, career development opportunities, and performance appraisals.99

Real Estate

In 2021, California added implicit bias training to the licensure requirements for real estate brokers and real estate salesmen—both for initial applicants and for continuing education requirements—mandating that a “three-unit semester course, or the quarter equivalent thereof,” include instruction on “real estate practice, which shall include a component on implicit bias, including education regarding the impact of implicit bias, explicit bias, and systemic bias on consumers, the historical and social impacts of those biases, and actionable steps students can take to recognize and address their own implicit biases.”100 A similar bill to mandate implicit bias requirements in real estate also has been introduced in Oregon.101

Camouflaging Radical Policy

As the policies listed above suggest, implicit bias is also frequently used as a tool with which to wield antidiscrimination law in order to mandate equal outcomes for all identity groups. An increasing number of regulations and statutes loosely refer to “implicit bias” or “unconscious bias” to justify their imposition of radical egalitarian and illiberal ideology and policy on Americans. Such regulations and statutes have particularly targeted lawyers, the police, and health care personnel, but they already affect, or potentially affect, virtually every sort of state licensure. Such requirements directly divert increasingly large amounts of private funds and taxpayer dollars to pay for required “anti-bias” trainings. They also create an institutional beachhead for the radical and discriminatory identity politics ideology sometimes referred to as critical race theory (CRT) or diversity, equity, and inclusion (DEI).

Implicit bias is also an intellectual engine that has significant legal advantages. When the Trump administration issued an executive order banning CRT, along with all other discriminatory ideologies, the Labor Department issued a ruling stating that implicit bias trainings were not banned, since they were not discriminatory. Implicit bias, which justified many of these policies in the first place, has been set up as a means to preserve the heart of CRT and DEI in the face of legal bans.

Implicit bias is used to justify a wide range of intrusive, ideologically motivated DEI policies, as well as to dismiss criticism of these policies as yet more “implicit bias”:

Organizations should (a) use trainings to educate members of their organizations about bias and about organizational efforts to address diversity, equity, and inclusion; (b) prepare for, rather than accommodate, defensive responses from dominant group members; and (c) implement structures that foster organizational responsibility for diversity, equity, and inclusion goals; opportunities for high-quality intergroup contact; affinity groups for underrepresented people; welcoming and inclusive messaging; and processes that bypass interpersonal bias. …

Because of staunchly held narratives of meritocracy and fairness, the idea that organizations or American society might be unfair is challenging for many people to accept, especially members of dominant or well-represented groups. … majority group members often resist information about inequality by justifying or holding onto misperceptions of inequality. … These defensive responses also extend to support for policies. When exposed to information documenting stark racial disparities in the prison system, Whites report higher support for punitive crime policies, which produce these disparities. … As organizations launch their diversity initiatives, they should be prepared for potential reactance and expect some defensive responses. Organizations can plan in advance to document how defensiveness manifests and to respond to defensiveness by correcting misperceptions; linking diversity efforts to the organization’s mission, values, and goals; and providing incentives for reaching diversity targets. … Rather than just hosting trainings about implicit bias, organizations might consider offering activities that focus directly on helping majority group attendees recognize and address potential defensiveness.102

In 2020, supporters of mandatory diversity training noted with relief that unconscious and implicit bias trainings would not be prohibited by the Trump administration’s executive order banning government trainings that contained discriminatory concepts.

Covered contractors can continue to implement unconscious and implicit bias trainings so long as the trainings are not blame-focused or targeting specific groups, and instead broadly address the development of biases, how they manifest themselves in our daily lives and how we can combat biases. … There are plenty of other workplace diversity and inclusion trainings and dialogues that the EO does not appear to prohibit, such as those involving cultural competence, generational diversity, harassment, microaggressions, communications across differences, mindfulness and trainings unrelated to race or gender, to name a few.103

In general, implicit bias is the engine for laws that might technically skirt bans on overtly discriminatory policies.

Pushback

Since 2021, some state legislators have begun to push back against implicit bias theory. In New Hampshire, a 2021 bill sought to prohibit divisive concepts such as unconscious bias.104 In 2022, Florida enacted a law to prohibit trainings that teach that “an individual, by virtue of his or her race, color, sex, or national origin, is inherently racist, sexist, or oppressive, whether consciously or unconsciously.”105 In 2021, Tennessee enacted a law that restricted what public school teachers could discuss in Tennessee classrooms about racism, so-called “white privilege,” and unconscious bias.106 In 2023, another Tennessee bill was introduced that would prohibit implicit bias training in Tennessee public schools, Tennessee colleges and universities, the Tennessee Department of Education, and the State Board of Education.107 Bills to prohibit implicit bias requirements also have been introduced in Arizona, Arkansas, Missouri, Utah, and West Virginia.108 The resistance to implicit bias theory, however, is much newer and smaller than the campaign to impose it.

Prospects

Implicit bias theory is altering American government and society piecemeal, through a host of individual laws and regulations enacted by federal, state, and local governments, as well as by private institutions and private businesses. These initiatives now impose implicit bias trainings, implicit association tests, diversity trainings, and a wide variety of other requirements on millions of Americans. The gravest consequence is the spread of implicit bias theory to our legal system, which threatens to replace individual intent in antidiscrimination law with disparate results—statistical associations showing “inequitable outcomes.” Implicit bias theory already pervades America; its proponents are working with all-too-great success to make it omnipresent.

Implicit bias theory has gone from success to success in the political world. At the same time, a large number of researchers have subjected it to devastating intellectual critique. Their work suggests that virtually every aspect of implicit bias theory lacks empirical substantiation.

The Emperor Has No Clothes: Critiques of Implicit Bias Theory

Unsteady Foundations

The most profound critiques of implicit bias theory are broader critiques of psychology as a whole, and especially of the subdiscipline of social psychology.

Psychology as a discipline embraced statistics early. Nineteenth-century psychologists who aspired to make psychology a science sought quantitative methods that would let them make universal statements about the mind. Statistics offered a means of quantification that supported bold scientific arguments while acknowledging the inescapable fact that human minds vary.

Yet even setting aside the fundamental critique that the ambition to make such universal statements may have no real-world foundation,109 psychology’s statistical revolution has been troubled. Psychologists played a prominent role in the unwieldy marriage of R. A. Fisher’s significance testing and the hypothesis-testing framework derived from the work of Jerzy Neyman and Egon Pearson, two frequentist approaches that treat p-values in incompatible ways. This theoretical inconsistency about how to treat p-values fueled a “practical” ability to design psychological-statistical experiments. Psychology also suffers as a discipline from running experiments with small sample sizes, and hence low statistical power: a low probability that a significance test will detect a true effect. The irreducible difficulty of defining mental characteristics, much less establishing their comparability from individual to individual, limits the discipline’s ability to conduct rigorous statistical experiments. The discipline also embraces the loose threshold for statistical significance of p ≤ 0.05, rather than the stricter thresholds used in other disciplines; some branches of physics, such as particle physics, use a “five-sigma” standard, roughly p ≤ 0.0000003. The social psychology subdiscipline appears to be unusually subject to politicized groupthink. For all of these reasons, psychology, and especially social psychology, has been unusually afflicted by the irreproducibility crisis. Any psychological research conclusion based upon statistical techniques warrants especially close scrutiny of its methodological foundations.110
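Two of the statistical points above, low power in small-sample experiments and the gap between the p ≤ 0.05 convention and a five-sigma threshold, can be made concrete with a little arithmetic. The sketch below uses only the Python standard library. It approximates the power of a two-sided, two-sample test with the normal distribution, which slightly overstates power relative to an exact t-test. The effect size (d = 0.4) and the group sizes are hypothetical values chosen for illustration, not figures drawn from any study cited in this report.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution: mean 0, sd 1


def two_sample_power(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample z-test.

    d is the standardized effect size (Cohen's d). The normal approximation
    ignores the small-sample t correction, so it slightly overstates power.
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    # Expected value of the test statistic if the true effect is d
    shift = d * (n_per_group / 2) ** 0.5
    # Probability that the statistic lands in either rejection region
    return (1 - STD_NORMAL.cdf(z_crit - shift)) + STD_NORMAL.cdf(-z_crit - shift)


def one_sided_p_from_sigma(k: float) -> float:
    """Upper-tail probability beyond k standard deviations of a normal."""
    return STD_NORMAL.cdf(-k)


if __name__ == "__main__":
    # Hypothetical study designs: same true effect, different sample sizes
    for n in (20, 100):
        print(f"d = 0.4, n = {n} per group: power ~ {two_sample_power(0.4, n):.2f}")
    print(f"two-sided p at 1.96 sigma ~ {2 * one_sided_p_from_sigma(1.96):.3f}")
    print(f"one-sided p at 5 sigma ~ {one_sided_p_from_sigma(5):.1e}")
```

Under these assumptions, a study with 20 participants per group detects a true effect of d = 0.4 only about a quarter of the time, whereas 100 per group raises power to roughly 0.8. The five-sigma threshold corresponds to a one-sided tail probability of about 2.9 × 10⁻⁷, several orders of magnitude stricter than 0.05.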

With particular reference to implicit bias theory, Chin further noted in 2023 that behavioral priming research, a subset of social psychology, has been largely discredited. That discrediting undermines, in particular, the basic framework used to justify implicit bias training as a means of reducing prejudicial behavior:

If priming (e.g., activating concepts like race and hostility) does not affect judgments and behavior, it seems unlikely that changing people’s automatic associations will be useful in reducing either those judgments and behavior, or tendencies that should be even harder to change, such as discrimination in legal judgments. Stated differently, if automatic associations do not predict behavior, then attempting to change someone’s IAT score to change their behavior—if that is possible—seems futile.111

None of these broader critiques directly address implicit bias theory itself. Yet all of them should, at the very least, give policymakers pause before they make regulations or laws based on any theory from psychology, and in particular from social psychology.

But these broader critiques are accompanied by a great many further critiques that specifically address implicit bias theory.

Critiques of Implicit Bias Theory

Policy based on implicit bias theory has spread throughout America even as the theory’s intellectual underpinnings have come under sustained and devastating assault. Implicit bias theory never acquired consensus support among psychologists: some published articles argued for its validity, while others critically examined the evidence and the theory. An increasing number of psychologists have since provided evidence of devastating problems with implicit bias theory.

Collectively, these critiques call into doubt virtually every aspect of implicit bias theory. It is best to list them individually, to give a full sense of how comprehensively they demolish implicit bias theory. The different critiques of implicit bias theory include the following:

- Andreychik (2012) provides evidence that some “negative” implicit evaluative associations register empathy rather than prejudice;112

- Arkes (2004) offers three objections to the argument that the IAT measures implicit prejudice: “(a) The data may reflect shared cultural stereotypes rather than personal animus, (b) the affective negativity attributed to participants may be due to cognitions and emotions that are not necessarily prejudiced, and (c) the patterns of judgment deemed to be indicative of prejudice pass tests deemed to be diagnostic of rational behavior”;113

- Cone (2017) reviews evidence that “implicit evaluations can be updated in a durable and robust manner,” which undermines the presumption that implicit bias cannot be overcome and therefore has deep and enduring effects;114

- Corneille (2020a) reviews evidence against a presumption underlying implicit bias theory: that there exists a distinct “associative/affective formation of attitudes and fears” mode of learning, “defined as automatic and impervious to verbal information”;115

- Corneille (2020b) concludes that scholars have used inconsistent definitions of “implicit” and recommends using a new terminology, to prevent confusion;116 following up, Corneille (2022) recommends that the terminology of “implicit bias” be replaced with “unconscious social categorization effects”;117

- Cyrus-Lai (2022) provides evidence that there is no significant employment bias against women and that, at present, “social cue-based explicit and implicit behavioral biases could be pro-male, pro-female, anti-Black, pro-Black, and so forth.” Cyrus-Lai (2022) also provides evidence that groupthink among academics has predisposed them to expect bias against women;118

- Jussim (2018) argues that stereotype accuracy, the “flip side” of implicit bias that studies the same subject matter but with reversed conclusions, has far greater support in psychological research than does implicit bias research;119 and Jussim (2020a) adds that “the associations tapped by the IAT may reflect not just cultural stereotypes, but implicit cognitive registration of regularities and realities of the social environment”;120

- Rubinstein (2018) presents data providing evidence that “individuating information can reduce or eliminate stereotype bias in implicit and explicit person perception” and that “patterns of reliance on stereotypes and individuating information in implicit and explicit person perception generally converged”;121 and

- Skov (2020) reviews evidence of unconscious/implicit sex bias in academia and concludes that “ascribing observed gender gaps to unconscious bias is unsupported by the scientific literature.”122

A further body of scholarly literature reviews the evidence critiquing implicit bias theory.123 In 2019, Gawronski observed that

(a) There is no evidence that people are unaware of the mental contents underlying their implicit biases; (b) conceptual correspondence is essential for interpretations of dissociations between implicit and explicit bias; (c) there is no basis to expect strong unconditional relations between implicit bias and behavior; (d) implicit bias is less (not more) stable over time than explicit bias; (e) context matters fundamentally for the outcomes obtained with implicit-bias measures; and (f) implicit measurement scores do not provide process-pure reflections of bias.124

In 2022, Gawronski also reviewed evidence that implicit bias cannot simply be equated with bias on implicit measures.125 In the same year, Cesario argued forcefully that there is no solid evidence that implicit bias, if it even exists, “plays a role in real-world disparities.”126

The collective intellectual demolition of implicit bias theory is astonishingly thorough—and matched by the parallel demolition of the Implicit Association Test.

Critiques of the Implicit Association Test

Researchers also have provided evidence for equally devastating problems with the effectiveness of the Implicit Association Test (IAT). These are as comprehensive as the foregoing critiques of implicit bias theory and are also best listed individually. A summary of the critiques of the IAT includes:

- Anselmi (2011) notes that the IAT’s reliability as a way to measure implicit prejudice is reduced because “positive words increase the IAT effect whereas negative words tend to decrease it”;127

- Blanton (2009) argues that the IAT provides little or no predictive validity for discriminatory behavior;128

- Blanton (2015a) further concludes that a significant component of what the IAT measures is random noise and trial error;129

- Blanton (2015b) provides evidence that “the IAT metric is ‘right biased,’ such that individuals who are behaviorally neutral tend to have positive IAT scores”;130

- Blanton (2017) argues that the IAT’s unreliability as a measure of individual implicit bias also will render it an unreliable measure of group-level implicit bias;131

- Bluemke (2009) provides evidence that different materials (“stimulus base rates”) alter IAT effects; the IAT, to a significant extent, measures the test questions and format, not the attitudes of test-takers;132

- Van Dessel (2020) reviews data suggesting that implicit measures research has been compromised by vague and varying definitions of key terms such as “automatic” and “implicit measures,” and that implicit measures have thus far failed to measure effectively;133

- Fiedler (2006) provides evidence that, although the Implicit Association Test “appears to fulfil a basic need, namely, to reveal people’s ultimate internal motives, desires, and unconscious tendencies,” it has not properly established the evidence for implicitness or association, or for the effectiveness of the test;134

- Forscher (2019) argues that “changes in implicit measures are possible, but those changes do not necessarily translate into changes in explicit measures or behavior”;135

- Hahn (2014) concludes that IAT test-takers can predict their own IAT scores with considerable accuracy, a result that casts “doubt on the belief that attitudes or evaluations measured by the IAT necessarily reflect unconscious attitudes”;136

- LeBel (2011) reviews evidence that implicit measures have low reliability (that is, low consistency across repeated administrations of a test); such low reliability estimates “imply higher amounts of random measurement error contaminating the measure’s scores,” and hence low replicability;137

- Hughes (2023) provides evidence that the Affect Misattribution Procedure (AMP), another implicit measure, does not measure implicit effects, since individuals show a high degree of awareness of the influences on their responses;138

- Oswald (2015) notes that few IAT studies have been done in the real world and that the theory lacks external validity: “No amount of statistical modeling or simulation can reveal the real-world meaning of correlations between IAT measures and lab-based criteria that are in the range of 0.15 to 0.25”;139

- Van Ravenzwaaij (2011) presents evidence that “offers no support for the contention that the name-race IAT originates mainly from a prejudice based on race”;140 and

- Unkelbach (2020) reviews evidence about the IAT: “Building on a Bayesian analysis and on the non-evaluative influences in the EP paradigm, we concluded that implicit measures are more likely prone to false-positives compared to false-negatives.”141
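Two items in this list turn on standard correlation arithmetic. Oswald (2015) cites IAT-criterion correlations of 0.15 to 0.25. Squaring a correlation gives the share of variance it accounts for, so that range corresponds to only about 2 to 6 percent. LeBel (2011) stresses random measurement error. One classical consequence, Spearman’s attenuation relation, is that such error shrinks any observed correlation by the square root of the product of the two measures’ reliabilities. The sketch below illustrates both. It is a generic psychometric illustration, not a reconstruction of either author’s analysis, and the reliability values in the usage lines are hypothetical.

```python
import math


def variance_explained(r: float) -> float:
    """Proportion of variance in one variable linearly accounted for by another (r squared)."""
    return r * r


def attenuated_r(true_r: float, reliability_x: float, reliability_y: float) -> float:
    """Expected observed correlation when both variables carry random measurement error.

    Spearman's classical attenuation relation: the true correlation is shrunk
    by the square root of the product of the two reliabilities.
    """
    return true_r * math.sqrt(reliability_x * reliability_y)


if __name__ == "__main__":
    # The range of IAT-criterion correlations quoted from Oswald (2015)
    for r in (0.15, 0.25):
        print(f"r = {r:.2f} accounts for {variance_explained(r):.2%} of the variance")
    # Hypothetical reliabilities (0.5 and 0.8), chosen only for illustration:
    # even a perfect underlying relation could not appear stronger than this.
    print(f"ceiling on observed r: {attenuated_r(1.0, 0.5, 0.8):.2f}")
```

The attenuation function also explains why low reliability tends to depress replicability. When most of a score is noise, the observed correlation in any one sample is both small and unstable.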

A further body of scholarly literature reviews the evidence critiquing the IAT.142 In 2023, Blanton summarized eleven major critiques of the IAT:

- The IAT has poor test-retest reliability and is contaminated by large amounts of random error.

- The IAT is weakly correlated with other measures of implicit attitudes, indicating it has low convergent validity.

- The IAT is contaminated by method variance. The scoring algorithm for the IAT was designed to remove it, but it does not.

- The IAT was constructed so that it measures relative attitudes towards two objects, rather than an attitude towards a single object. In doing so, the IAT unnecessarily confounds attitudinal dimensions in ways that restrict modeling and inference.

- The metric of the IAT is arbitrary, with an empirically unverified zero point; it cannot support statements of bias prevalence.

- The criteria for classifying people into ordinal implicit bias categories on the IAT website are arbitrary.

- Researchers who employ IAT measures to predict discrimination often report their data in ways that suggest discriminatory bias, when it was not observed.

- The revised IAT scoring algorithm artifactually equates unreliable responding with reduced implicit bias.

- The IAT predicts behavior, on average, no better than attitude measures from the 1960s and early 1970s that caused a crisis in social psychology and forced attitude theorists to re-examine the utility of the attitude construct.

- When statistically controlling for explicit attitudes in tests of IAT predictive utility, researchers routinely employ suboptimal and outdated measures of explicit attitudes, a practice that can inflate estimates of the impact of implicit attitudes on behavior.

- The IAT is confounded by many influences other than implicit attitudes. As such, it can support false and counterproductive narratives about its effects.143
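The complaint that the revised scoring algorithm “equates unreliable responding with reduced implicit bias” follows from how the D measure is built. At its core, D divides the difference in mean response latency between the two critical pairings by the standard deviation of all latencies pooled across both. The simplified sketch below keeps only that core and omits the full algorithm’s trial exclusions, error penalties, and averaging over practice and test blocks. Both respondents are hypothetical. Each is, on average, exactly 100 ms slower in the incompatible pairing, but one responds steadily and the other erratically.

```python
from statistics import mean, stdev


def simplified_d_score(compatible_ms: list[float], incompatible_ms: list[float]) -> float:
    """Simplified core of the IAT D measure (not the full published algorithm).

    Difference in mean latency (incompatible minus compatible) divided by the
    sample standard deviation of all latencies pooled across both blocks.
    """
    pooled = compatible_ms + incompatible_ms
    return (mean(incompatible_ms) - mean(compatible_ms)) / stdev(pooled)


if __name__ == "__main__":
    # Hypothetical steady responder: small trial-to-trial variability
    steady = simplified_d_score(
        [700.0, 720.0, 680.0, 710.0, 690.0],
        [800.0, 820.0, 780.0, 810.0, 790.0],
    )
    # Hypothetical erratic responder: same 100 ms mean gap, much more variability
    erratic = simplified_d_score(
        [500.0, 900.0, 600.0, 800.0, 700.0],
        [600.0, 1000.0, 700.0, 900.0, 800.0],
    )
    print(f"steady D = {steady:.2f}, erratic D = {erratic:.2f}")
```

Because the erratic responder’s noise inflates the pooled standard deviation, the identical 100 ms gap yields a D score roughly one-third as large. On this scale, noisy responding is indistinguishable from weaker bias.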